
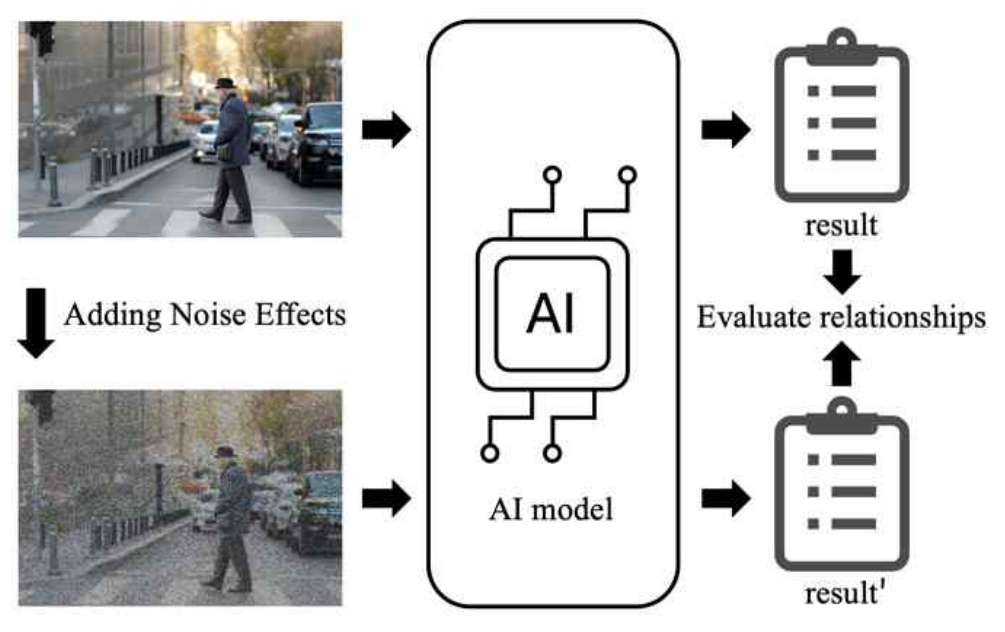

Jun-Cheol Suh♦, Jung-Been Lee*, Jeong-Dong Kim* and Taek Lee°

Metamorphic Test-Based Robustness Evaluation of Object Detection Models in Safety Critical Systems

Abstract: In recent years, artificial intelligence (AI) technology has made rapid progress and is widely used in various fields such as natural language processing and computer vision. However, the black-box nature of AI models raises reliability concerns, particularly in safety-critical systems (ScSs) such as autonomous vehicles (AVs) and medical systems, where understanding model behavior is crucial. This study proposes a robustness evaluation method for AV object detection models (ODMs) by integrating metamorphic testing and adversarial attack techniques. Using a YOLOv5-based ODM, the model was tested to detect essential objects in traffic safety scenarios, including pedestrians, traffic lights, and road signs. Various image augmentations simulating physical deformations (e.g., rotation, tilt) and weather conditions (e.g., snow, rain) were applied to measure model robustness. Results showed that model performance varied significantly with specific augmentations, revealing vulnerabilities. Notably, the pedestrian detection model, despite its high accuracy during training, showed only 35.8% robustness against augmented images. This highlights that even high-performing models in general conditions may fail under diverse environmental factors. These findings underscore the importance of rigorous robustness evaluation for AI models in ScSs to ensure reliability and safety in real-world applications like AVs.

Keywords: object detection, metamorphic test, robustness evaluation, safety critical system, adversarial attacks

Ⅰ. Introduction

In recent years, artificial intelligence (AI) technology has evolved and has become widely used in several fields, including natural language processing, computer vision, and the arts.
In particular, the field of computer vision has witnessed widespread application of technologies such as self-driving cars and intelligent CCTV[1,2]. These technologies have revolutionized our daily lives and played an important role in traffic safety systems. However, owing to the black-box nature of AI models, their behavior cannot be fully understood, which raises the issue of reliability[3]. Particularly in safety-critical systems (ScSs), which are directly related to human life, such as autonomous vehicles (AVs) and medical systems, it is important to understand the reliability of these models, because malfunctions in these systems can cause serious accidents[4]. Unlike classical software, AI models are highly complex, learn and predict based on data, and can react unexpectedly to various environments and situations. Therefore, thorough testing is essential to ensure the reliability and stability of AI systems[5-7]. Traditional testing methods for classical software do not fully reflect the characteristics of AI systems; therefore, a new approach is required. Because the models at the core of AI systems rely heavily on the data provided, any bias or degradation in data quality can significantly degrade their performance. To address these problems, metamorphic testing (MMT) techniques and other testing methods, such as neuron coverage testing and maximum safety radius testing, have been studied[8]. These techniques test the response of the model in different ways to evaluate its real-world performance more accurately and quantitatively. For instance, most studies on object detection models (ODMs) for AVs discuss test sufficiency based on the accuracy of the detection model; however, accuracy is only a performance metric for a limited test set and not a test of the robustness of the model to unknown input noise.
In addition, studies that discuss test sufficiency using the test coverage of activated neurons in AI models are limited in that the number of test cases cannot be increased indefinitely to improve coverage because of cost. Moreover, measuring coverage is time-consuming and focuses only on the percentage of neuron activation, which makes it difficult to judge whether testing is sufficient to ensure reliability from the perspective of critical application services.

This study proposes a testing methodology that borrows ideas from the MMT technique[9] and adversarial attacks (AA)[10] to evaluate the robustness of AI models, which can be effectively applied even in the absence of clear criteria. As shown in Figure 1, MMT is a technique for identifying errors in software testing by verifying that the expected results are maintained when the input is varied. AA is an attack technique that intentionally fools the predictions of a model by adding noise to the input data, thereby causing the deep learning (DL) model to misbehave. In this study, building on a previous study[11], we added image augmentation techniques to generate test cases and expanded the depth of the per-object performance comparison. Assuming an AV environment, we define pedestrians, traffic signs, and traffic lights as dangerous objects, and train an ODM to detect them for testing purposes. Because various unpredictable situations can occur in the real world, we augmented the limited input data using MMT techniques, which can evaluate a model without a test oracle, and assessed the detection ability of the model by comparing its outcomes on the augmented and original images. The proposed MMT technique presents a robustness indicator, computed through randomized image selection and transformation, that allows users to intuitively determine the adequacy of testing.
Using the MMT method, which uses image augmentation to generate test cases that can occur in the real world for a given AI application service, it is easy to generate cost-effective test cases, easy for users to intuitively understand the strengths and weaknesses of the model (which types of noise it is strong or weak against), and easy to measure the sufficiency of the test. The contributions of this study are summarized as follows:

· A novel robustness metric was defined to quantitatively evaluate the robustness of the model, and more than 100 iterative randomized tests were performed for each test scenario to ensure the generality of the metric evaluation through a comparison analysis.

· A methodology was proposed to evaluate the robustness of ODMs in ScSs by utilizing MMT techniques.

· Tests were performed on ODMs assuming AVs, considering various weather and vision distortion scenarios that could occur in real-world road conditions.

Ⅱ. Related Work

Several studies have been conducted by the academic community on the use of MMT to test AI systems. Tao et al. (2021) used a real-world image recognition application known as Calorie Mama as an example to demonstrate how MMT can increase the efficiency of testing and improve system performance by transforming data. This study explored the applicability of MMT to image recognition and proposed a new approach to ensure the quality of AI software[12]. Kanstrén (2020) applied various image deformation techniques (e.g., illumination changes and geometric distortions) to an object detection system used in autonomous driving and showed that MMT was effective in assessing the robustness of the system.
In particular, this study explored the applicability of MMT by including not only computer vision models but also data from various sensors, such as light detection and ranging (LiDAR), and proposed a practical approach to ensure the reliability of autonomous AI systems that integrate multiple sensors in highly variable real-world environments[13]. Na et al. (2022) proposed a metamorphic test case generation technique for convolutional neural network (CNN) image classification models to validate the performance of CNN-based models in real-world input environments. By transforming the test set with certain rules and then checking whether the output violates the metamorphic relation (MR), this study enabled the evaluation of whether a CNN model could maintain consistent performance in different environments. This approach was effective in verifying the reliability of image classification models and played an important role in ensuring their robustness in real-world settings[14]. Wang et al. (2019) proposed a metamorphic testing system known as MetaOD to systematically reveal possible detection errors in deep neural network-based object detection systems. The system was designed to check whether the object detection results of the original and synthetic images were consistent, using images synthesized by inserting additional objects into the original image. MetaOD found tens of thousands of detection flaws in commercially available ODMs and pre-trained models, and by retraining the models using the synthetic images that caused the false detections, MetaOD improved the mean average precision (mAP) score from 9.3 to 10.5[15,16]. MetaOD can detect several object detection errors in both original and synthetic images. In this study, we propose a method for quantitatively evaluating the robustness of core ODMs in ScSs by extending the techniques of existing MMT studies.
We simulated various real-world road conditions by applying visual deformations, such as rotation and tilt, as well as complex weather effects, such as rainfall, snowfall, and flares, and quantified the changes in the robustness of the model under these deformations. By measuring the robustness of AI models in a more specific and quantitative manner than in previous studies, we can develop a framework to assess the real-world applicability of autonomous driving systems more reliably. We believe that our findings go beyond simply evaluating the accuracy of the model: they clearly reveal performance changes due to various environmental factors and physical deformations and provide a direction for enhancing the reliability of AI systems within ScSs. Because ensuring the safety and robustness of AI models is essential, particularly in the field of autonomous driving, this study is important as a basis for designing and improving models that can maintain stable performance under various adverse conditions that may occur in real-world road environments. In addition, although MMT has been studied previously, this study is unique in that it extends the image augmentation testing technique specialized for AV ODMs and proposes and visualizes object-specific robustness measures. Unlike prior studies that focused on qualitative assessments, our research quantitatively evaluates ODM robustness under diverse real-world conditions, providing a more practical approach for AV reliability.

Ⅲ. Background

3.1 Object Detection Models for Autonomous Vehicles

AVs are complex systems that integrate advanced technologies. Computer vision and camera technology play key roles in recognizing the driving environment and collecting data[16].
The AI model of an AV utilizes various sensors and algorithms to understand road conditions in real time, plan the driving route, and control the vehicle. In this process, cameras collect visual information, and computer vision algorithms analyze this information to make various decisions necessary for driving[17]. Computer vision acts as the “eyes” of the self-driving car. It analyzes image data from cameras to recognize objects on the road, detect lanes, and identify traffic signals and signs. This is primarily achieved through DL-based algorithms. CNNs are commonly used because they excel at extracting various features from images and classifying objects based on these features. Object recognition is an important task in AVs. Detecting and classifying vehicles, pedestrians, bicycles, and animals on the road in real time is essential for safe driving. Models such as You Only Look Once (YOLO), single shot multibox detector, and region-based CNNs have been used for this purpose. These models either analyze the entire image at once or first identify specific regions and then analyze them in detail to recognize objects. This process requires high accuracy and fast processing speed[18-20]. In addition to cameras, AVs are equipped with various sensors, including LiDAR, radar, and ultrasonic sensors. Each sensor has its strengths and weaknesses, and data fusion can provide complementary information. For instance, LiDAR uses lasers to create high-precision three-dimensional maps that are useful for pinpointing the exact locations of objects. Radar uses radio waves to detect objects over long distances and is less affected by weather or lighting conditions. Ultrasonic sensors are suitable for close-range object detection and are often used in parking assist systems. Data from these sensors can be combined to achieve more accurate and reliable environmental recognition[17,21]. Camera technology plays a key role in providing visual information to AVs.
High-resolution cameras provide more detailed images, enabling more precise object recognition and environmental awareness. Multispectral cameras utilize infrared and ultraviolet spectra in addition to visible light to ensure reliable perception under various conditions. Advances in camera technologies have significantly improved the safety and efficiency of AVs[21]. In this study, we focused on camera-based object detection, emphasizing the most widely used vision recognition technologies in the field.

3.2 Theory behind the Metamorphic Test

MMT is becoming an important methodology in the field of software testing, particularly in cases that are difficult to address using traditional testing techniques. MMT is a methodology for identifying defects in software systems by predicting and comparing expected changes in the output as the input data changes. It also plays an important role in the validation of AI and machine learning models[8]. The core idea of MMT is that rather than verifying that the software provides the correct output for a given input, we define a set of transformed inputs and their expected outputs and compare them to assess the consistency and reliability of the system. In this process, the relationship between the input and output before and after the transformation is known as an MR. MRs are defined to ensure that the software behaves consistently with respect to the transformed input and can include several properties, including consistency, idempotence, and inverse relations. Consistency implies that the transformed inputs for the same task should produce consistent outputs; idempotence implies that the same input should maintain the same result even if transformed multiple times; and inverse relations imply that certain transformations of the input should return to the original input when applied in reverse[8,22]. The benefits of MMT include improved detection, test automation, and expanded test coverage.
MRs can effectively expose defects that are difficult to detect using traditional test cases alone, and because the process of transforming inputs and comparing results can be automated, MMT can be easily applied to large datasets and complex models. In addition, a range of transformation techniques can be used to simulate various environments and situations for testing[8]. The MMT process comprises the following steps:

① Define an appropriate MR that fits the characteristics and requirements of the test system. For instance, in an ODM for an AV, the MR can be set by applying transformations such as rotation, blurring, and brightening of the image.

② Generate new test inputs by applying transformations based on MRs to the original input data.

③ Verify that the MR is satisfied by comparing the output of the system for the original input and the transformed input.

④ If the MR is not satisfied, it is considered a defect in the system and further analyzed to identify the cause.

In this study, MMT was applied to evaluate the ODM robustness related to the safety of AVs, as shown in Figure 2. Specifically, various image deformation techniques were applied to ODMs that detected pedestrians, traffic lights, and traffic signs to set the MR, and the detection results before and after deformation were compared. This allowed us to identify potential defects in the model and quantitatively evaluate its robustness. The application of MMT techniques plays an important role in improving the reliability of ODMs in safety-sensitive systems such as AVs and can contribute to the realization of safe and reliable AI systems in real life.

Ⅳ. Experimental Setup

4.1 Test Model Construction

As shown in Figure 2, the target objects are traffic lights, traffic signs, and pedestrians, assuming the situation of an AV.
A total of 18,000 traffic light data points and 19,000 traffic sign data points provided by AI Hub (https://www.aihub.or.kr) and 15,000 pedestrian data points provided by Roboflow (https://roboflow.com) were used to train a YOLOv5 model to construct three ODMs with pedestrians, traffic lights, and traffic signs as target objects. As a result of the training, we constructed three AI models for testing with accuracy rates of 93.2% for the pedestrian detection model, 98.2% for the traffic light detection model, and 67.8% for the traffic sign detection model.

4.2 Test Scenarios

A total of seven test scenarios were organized, assuming situations that are likely to occur in a typical driving environment when detecting pedestrians, traffic lights, and traffic signs. Details are summarized in Table 1.

Table 1. Image augmentation scenarios

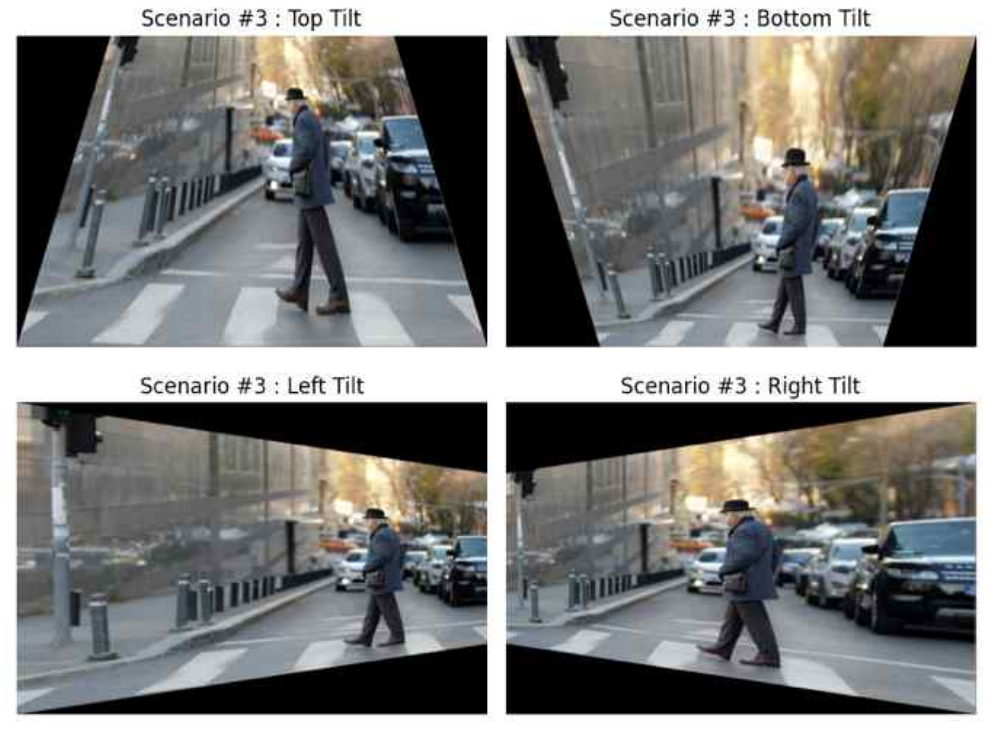

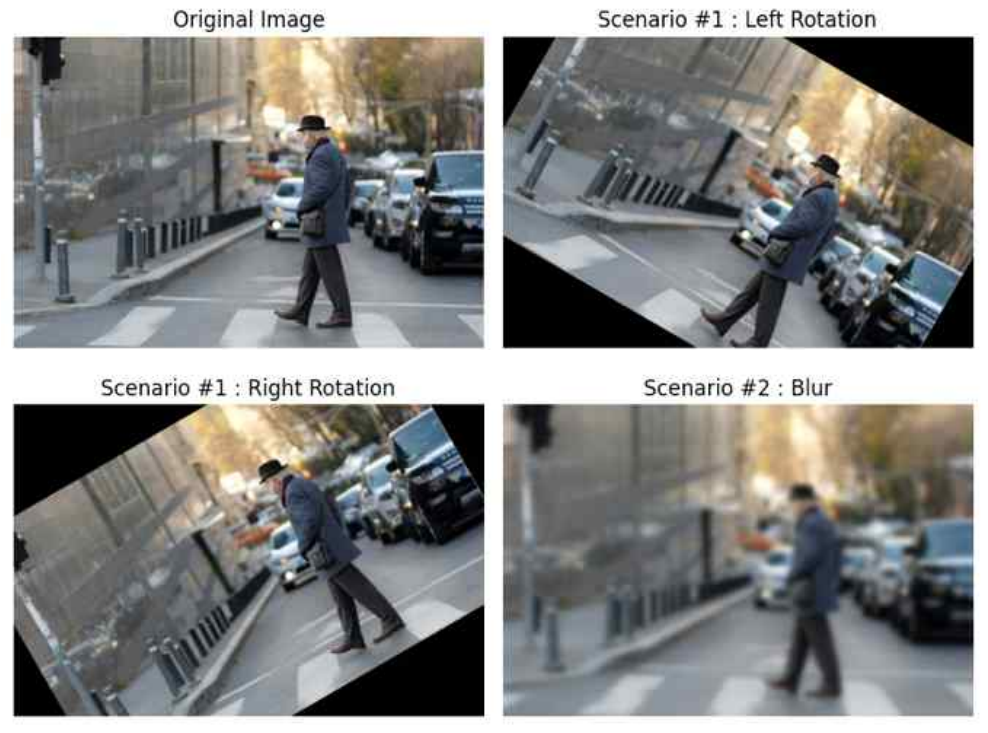

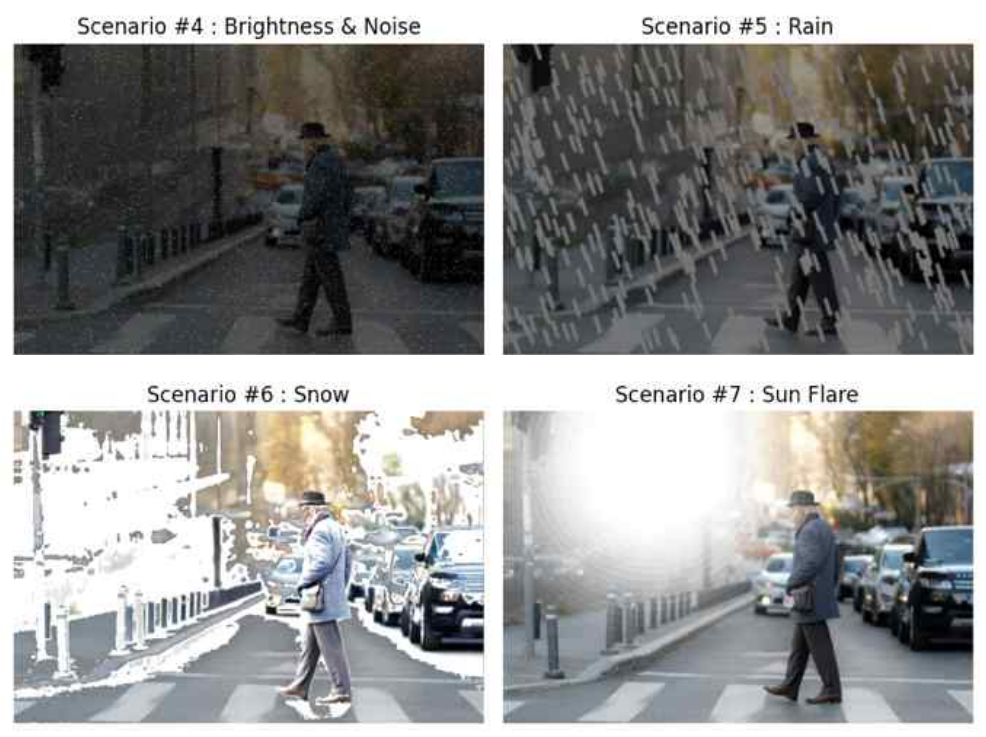
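
The four MMT steps from Section 3.2 can be sketched for scenarios of the kind listed in Table 1 as follows. This is only an illustrative sketch: `toy_detector`, `darken`, `flip_horizontal`, and `check_relations` are hypothetical names, the detector is a pixel-threshold stand-in rather than a YOLOv5 model, and treating "same number of detections" as a satisfied MR is an assumption, since the matching rule is not spelled out here.

```python
from typing import Callable, Dict, List

Image = List[List[int]]  # grayscale pixels in the range 0-255


def darken(img: Image, factor: float) -> Image:
    """Brightness-reduction transform: scale every pixel by `factor`."""
    return [[int(p * factor) for p in row] for row in img]


def flip_horizontal(img: Image) -> Image:
    """Geometric transform: mirror each row."""
    return [row[::-1] for row in img]


def toy_detector(img: Image, threshold: int = 128) -> int:
    """Hypothetical stand-in for an ODM: counts bright pixels as detections."""
    return sum(p >= threshold for row in img for p in row)


def check_relations(
    img: Image,
    detector: Callable[[Image], int],
    transforms: Dict[str, Callable[[Image], Image]],
) -> Dict[str, int]:
    """Steps 2-4: transform the input, rerun the detector, and record
    1 if the output matches the original result (MR satisfied), else 0."""
    baseline = detector(img)
    return {
        name: int(detector(transform(img)) == baseline)
        for name, transform in transforms.items()
    }


# Step 1: the MR is "the detection result must not change under the transform".
image = [[200, 10], [30, 220]]
transforms = {
    "flip": flip_horizontal,
    "darken_0.9": lambda im: darken(im, 0.9),
    "darken_0.5": lambda im: darken(im, 0.5),
}
result = check_relations(image, toy_detector, transforms)
print(result)
```

A 0 entry marks a transform under which the stand-in detector's output changed, which is the kind of MR violation that step ④ flags for further analysis.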

Based on the scenarios listed in Table 1, Python’s OpenCV and Albumentations were used to automatically transform the original image and input it into the model for testing. Rotation was applied to the left and right in 5° increments up to a total of 175°, and the effects of blur, image brightness reduction, and tilt were applied from steps 1 to 10. Weather effects such as rain, snow, and flare were sequentially increased in intensity from steps 1 to 5, and the results were saved after inputting them into the model. Examples of image conversions are shown in Figures 3-5.

4.3 Testing Models

Equation (1) is designed to calculate the degree of robustness by comparing the results of the original and augmented images.
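
Written out from the symbol definitions given in this section (binary match indicator $$C_{i, j}$$, M augmentations, N images), Equation (1) plausibly takes the following form. This is a reconstruction from those definitions, not a verbatim copy of the published equation:

$$R = \frac{1}{N \cdot M} \sum_{i=1}^{N} \sum_{j=1}^{M} C_{i, j} \quad (1)$$

Equivalently, the inner average over the N images gives the robustness for a single augmentation j, and averaging those M values gives R.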

As shown in Figure 2 and Equation (1), $$C_{i, j}$$ is a binary variable that indicates whether the result of the j-th transformation method matches the original result for the i-th image. It is set to 1 if the result of processing method j matches the original result of image i and 0 otherwise. M denotes the number of image augmentations based on the scenario. N denotes the number of images used for testing. In this experiment, a total of seven scenarios were considered, of which rotation was split into two variants (left and right) and tilt into four (up, down, left, and right); therefore, the value of M in the experiment was 11. Because we randomly extracted 50 images from the test dataset, the N value was 50. Based on the above formula, we can determine the robustness of each variant and average them to derive the R-value, which is the robustness over the N images. However, N randomly selected images may be biased toward a particular outcome; therefore, describing the characteristics of the population from a single sample is difficult. One solution would be to use the entire test dataset, but even if the test dataset represents real-world self-driving, we cannot test every situation in the real world; therefore, we defined a formula that averages the R-value over sufficient iterations to approximate the average of the population.
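
The procedure described above (score a random sample of N images against M augmentations, then repeat and average) can be sketched in a few lines of Python. The function names and the 0/1 match-matrix layout (one row per image, one column per augmentation) are assumptions made for illustration; how a "match" is decided for a real detector is left outside the sketch.

```python
import random
from typing import List, Sequence

MatchRow = Sequence[int]  # one image: M entries, 1 = same result as original


def robustness(match: Sequence[MatchRow]) -> float:
    """R for one sample: the fraction of (image, augmentation) pairs
    whose detection result matched the original image's result."""
    if not match or not match[0]:
        raise ValueError("match matrix must be non-empty")
    n_images = len(match)
    n_augmentations = len(match[0])
    return sum(sum(row) for row in match) / (n_images * n_augmentations)


def mean_robustness(
    pool: List[MatchRow], sample_size: int, iterations: int, seed: int = 0
) -> float:
    """Average R over repeated random samples drawn without replacement
    from `pool` (the paper's setting is 50 images, 100 iterations)."""
    rng = random.Random(seed)
    scores = [
        robustness(rng.sample(pool, sample_size)) for _ in range(iterations)
    ]
    return sum(scores) / iterations


# Toy pool: 10 images, 4 augmentations each, with a fixed match pattern.
pool = [[1, 0, 1, 1] for _ in range(10)]
print(robustness([[1, 0], [1, 1]]))
print(mean_robustness(pool, sample_size=5, iterations=3))
```

Fixing the random seed keeps the repeated sampling reproducible, which makes it easier to compare robustness values across models.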

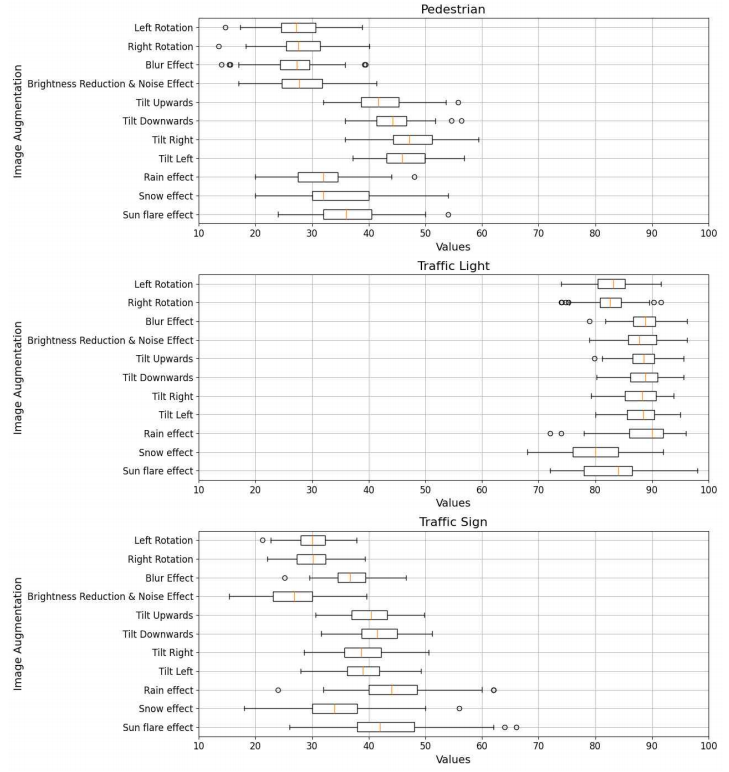

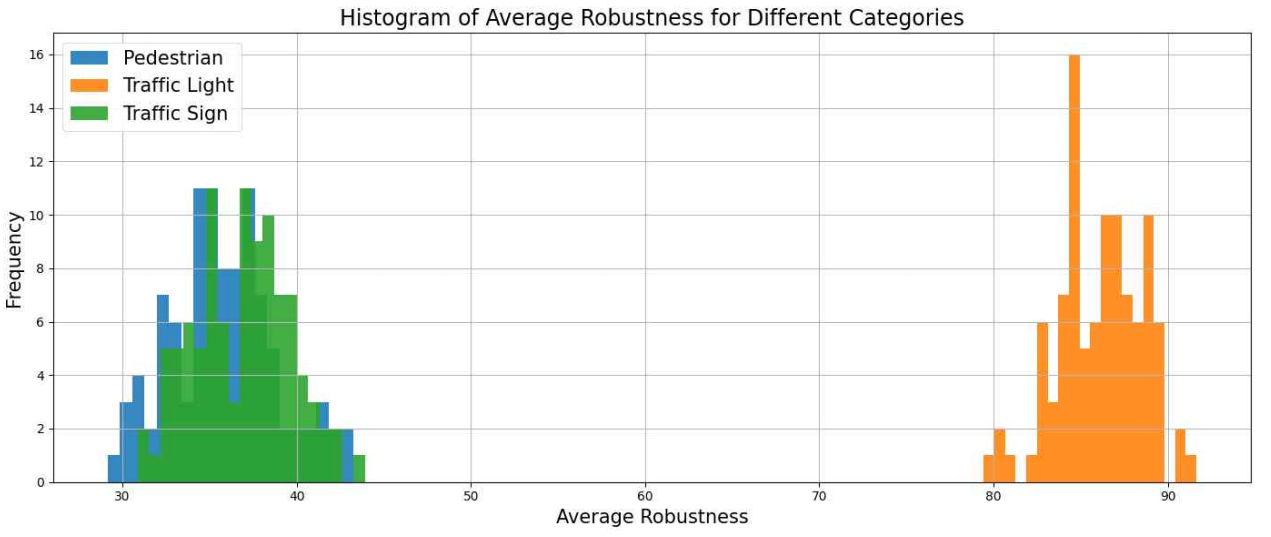
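
The averaging step over repeated tests can be written as follows, using the symbols $$R_k$$, n, and $$\hat{R}$$ that the text defines. As with Equation (1), this is a reconstruction from those definitions rather than a verbatim copy:

$$\hat{R} = \frac{1}{n} \sum_{k=1}^{n} R_k \quad (2)$$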

$$R_k$$ denotes the test result obtained in the k-th test, that is, the average robustness. n denotes the number of test iterations, that is, the number of times the test is repeated on randomly extracted images. In this experiment, 50 randomly selected images were tested, and the process was repeated 100 times; thus, the value of n was 100. By summing the average robustness over the n repetitions and dividing by n, we obtain the robustness of the model, $$\hat{R}$$.

4.4 Experimental environments

The experimental conditions were as follows: The training and test data were image data sourced from AI Hub and Roboflow. The testing models were implemented using YOLOv5. The mAP of each model is as follows: traffic light detection model, 98.2%; pedestrian detection model, 93.2%; and traffic sign detection model, 67.8%. The experiment was executed on Google Colab with an A100 GPU and the software packages YOLOv5, OpenCV, and Albumentations.

Ⅴ. Experiment Result

5.1 Experiment Result

The experimental results are summarized in Table 2. Model robustness is reported as the average robustness value over 100 distortion tests, each covering the full set of distortion techniques. For instance, the robustness of 35.8% for the pedestrian model in Table 2 indicates that, on average, the model performed equally well about 36 times out of 100 distortion tests. Robustness refers to the ability of a model to produce the same detection results as for the original image, even when the model is given a scenario-specific distorted image. The pedestrian detection model achieved 93.2% accuracy; however, its robustness was the lowest at 35.8%. The traffic light detection model had a high accuracy of 98.2% and robustness of 86.09%, whereas the sign model had an accuracy of 67.8% and robustness of 36.87%.
Notably, the robustness of the pedestrian model was lower than that of the sign model, which had an accuracy of 67.8% and a median of 37.06%. Finally, the pedestrian model was the most vulnerable to the left rotation deformation, the traffic light model to the snow effect, and the sign model to the brightness reduction and noise effects.

Table 2. Overall Experiment Result

5.2 Result Interpretation by Object

As summarized in Table 2, the relationship between the accuracy and robustness of the target detection was not as expected. In Figures 6 and 7, we can observe that the mAP of the pedestrian model is similar to that of the traffic light model, but its robustness distribution and average robustness for each variant are similar to those of the traffic sign model, with even lower minimum values. The pedestrian detection model exhibited high accuracy (93.2%) but the lowest robustness (35.8%). This result is even lower than that of the sign model with 67.8% accuracy, which is contrary to the expectation that a model with a higher accuracy would be more robust. The traffic light detection model performed relatively well, with 98.2% accuracy and 86.09% robustness, which increases the likelihood that it will provide reliable results in real-world applications. This high performance suggests that the traffic light detection model is highly adaptable to various environmental factors. However, despite its high accuracy, the pedestrian model exhibited a relatively low robustness of 35.8%, indicating a lack of training effectiveness. This suggests that the pedestrian model is likely to be vulnerable to various deformations in real-world use, raising concerns regarding safe driving and pedestrian protection. These results demonstrate that model performance cannot be judged by accuracy alone and emphasize that robustness is an important evaluation factor in addition to accuracy; considering it can reveal counterintuitive results, particularly in the case of the pedestrian model. Therefore, both accuracy and robustness should be considered when evaluating model performance, and relying solely on accuracy for decision-making can be risky. This insight is expected to be an important factor for increasing the reliability of models in real-world applications.

Ⅵ. Conclusion

In this study, we proposed a methodology for evaluating the robustness of models by borrowing the concepts of MMT and AA, applying visual deformation techniques to ODMs in ScSs. To present a concrete case study, we constructed YOLOv5-based ODMs to detect pedestrians, traffic lights, and traffic signs in an autonomous driving situation, and quantitatively calculated the average robustness of each model through various image augmentation test scenarios. We applied visual deformations such as rotation, blur, brightness degradation, noise, and tilt, as well as deformations reflecting various weather conditions such as rain, snow, and flares, to evaluate the responsiveness of the models to adverse conditions that may occur in real-world autonomous driving situations. The results demonstrated that the robustness of the models varied depending on the type and intensity of the deformations. In particular, the performance of the models tended to decrease significantly under certain weather conditions and physical deformations (flare and snow), as shown in Figure 6. These results revealed the vulnerability of the models to certain deformations, which can serve as an important basis for further research on improving the reliability of ODMs in ScSs. The results of this study provide an important example of the validity of the MMT technique for evaluating the robustness of AI models in the field of traffic safety, such as AVs. By simulating various scenarios and real-world road conditions, we closely examined the performance of the models, which can lead to improvements in AI models related to traffic safety. In future work, more sophisticated evaluations with more variants and test scenarios that are closer to real-world road conditions are required. In addition, robustness evaluation through data fusion with other sensors used in autonomous driving, such as LiDAR and radar, will be an important research topic[13].
Furthermore, developing training methodologies to compensate for model weaknesses based on the results of these robustness assessments remains an important challenge in improving the reliability of AI-based ScSs.

Biography

Jung-Been Lee

Feb. 2011: M.S. degree, Department of Computer and Radio Communications Engineering, Korea University.
Aug. 2020: Ph.D. degree, Department of Computer Science and Engineering, Korea University.
Sept. 2020~Feb. 2023: Research Prof., Chronobiology Institute, Korea University.
Mar. 2023~Current: Assistant Prof., Division of Computer Science and Engineering, Sun Moon University.
[Research Interests] Digital healthcare informatics, Sensor data mining, AI, Software Engineering
[ORCID:0000-0002-8208-0387]

Jeong-Dong Kim

Feb. 2008: M.S. degree, Computer Science and Engineering, Korea University.
Aug. 2012: Ph.D. degree, Computer Science and Engineering, Korea University.
Mar. 2016~Current: Associate Professor in the Department of Computer Science and Engineering, Sun Moon University.
[Research Interests] Deep Learning, Healthcare, Software & Data Engineering, and Bioinformatics.
[ORCID:0000-0002-5113-221X]

Taek Lee

Feb. 2006: M.S. degree, Computer Science and Engineering, Korea University.
Feb. 2016: Ph.D. degree, Computer Science and Engineering, Korea University.
Sep. 2017~Feb. 2023: Assistant Professor, Department of Convergence Security Engineering, Sungshin Women’s University.
Mar. 2023~Current: Assistant Professor, Division of Computer Science and Engineering, Sun Moon University.
[Research Interests] information security, software engineering, machine learning and data mining.
[ORCID:0000-0003-2277-8211]

References


Cite this article

IEEE Style J. Suh, J. Lee, J. Kim, T. Lee, "Metamorphic Test-Based Robustness Evaluation of Object Detection Models in Safety Critical Systems," The Journal of Korean Institute of Communications and Information Sciences, vol. 50, no. 7, pp. 995-1006, 2025. DOI: 10.7840/kics.2025.50.7.995.

ACM Style Jun-Cheol Suh, Jung-Been Lee, Jeong-Dong Kim, and Taek Lee. 2025. Metamorphic Test-Based Robustness Evaluation of Object Detection Models in Safety Critical Systems. The Journal of Korean Institute of Communications and Information Sciences, 50, 7, (2025), 995-1006. DOI: 10.7840/kics.2025.50.7.995.

KICS Style Jun-Cheol Suh, Jung-Been Lee, Jeong-Dong Kim, Taek Lee, "Metamorphic Test-Based Robustness Evaluation of Object Detection Models in Safety Critical Systems," The Journal of Korean Institute of Communications and Information Sciences, vol. 50, no. 7, pp. 995-1006, 7. 2025. (https://doi.org/10.7840/kics.2025.50.7.995)
